Data Centers

A data center is a physical or cloud-based environment that provides compute resources, data storage, and network connectivity to run applications, deliver digital services, and handle critical information securely and reliably. Modern data centers can operate on-premises, in the cloud, or at the edge, offering scalable and resilient infrastructure for businesses of all sizes.

Why Are Data Centers Important?

Data centers are important because they host the systems and applications a business relies on every day. They provide the computing power, storage, and network infrastructure that keep operations running and data accessible. Without a stable data center environment, core business functions slow down or stop entirely.

Businesses use data centers to support:

Communication & Productivity

Email, file sharing, internal communication, and workplace software all rely on stable compute and storage.

Virtual Workspaces

Virtual desktops and collaboration environments run on data center infrastructure for performance and reliability.

Business & Data Systems

CRM, ERP, and other business databases run in data centers to ensure secure, low-latency, always-available access.

AI & High-Performance Computing

Machine learning, big data pipelines, retrieval-augmented generation (RAG) systems, and inference workloads all run in data centers.

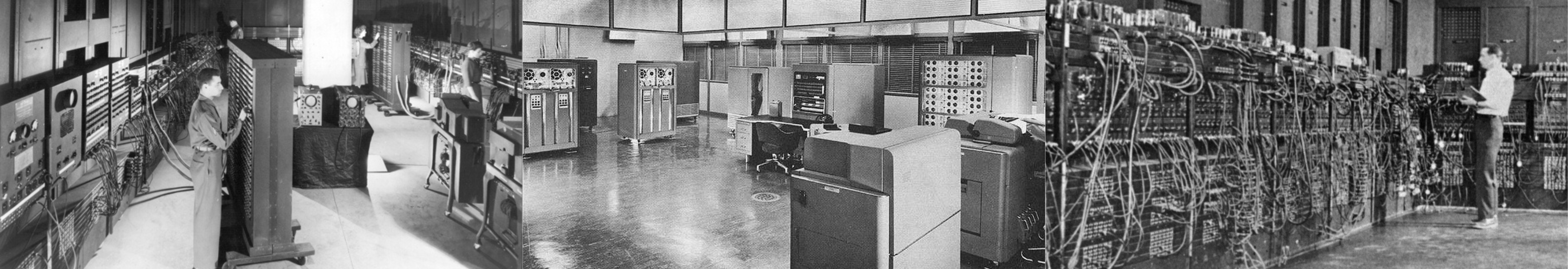

The Evolution of Data Centers

Data centers began as large machine rooms in the 1940s and 1950s, when early computers such as ENIAC filled entire rooms and required strict environmental control. As computing hardware became more compact and reliable, outsourced IT services and dedicated data center providers emerged.

1990s

From Mainframes to Modern Data Centers

Microprocessors, the rise of the internet, and client–server architecture enabled a shift from monolithic systems to racks of smaller servers.

Standardized 19-inch racks, structured cabling, and organized layouts defined the modern data center.

Commercial hosting and early colocation providers emerged, offering shared, professionally managed environments.

2000s

Virtualization & Cloud

Data center construction accelerated with the dot-com boom, then shifted to efficiency after the downturn.

Virtualization matured, allowing multiple workloads to run on a single physical machine and improving space, power, and cooling efficiency.

Cloud computing gained traction, letting organizations consume compute and storage on demand instead of investing in hardware.

2010s

Hyperscale Architectures

Hyperscale facilities, such as Google’s 2006 site in The Dalles, Oregon, demonstrated warehouse-scale computing and high levels of automation.

Other large technology providers adopted similar designs as global online services and cloud platforms expanded.

2020s & Beyond

Cloud, Edge & AI

Modern data centers now support cloud applications, IoT ecosystems, automation platforms, and AI workloads that require high-density compute and specialized cooling.

Organizations continue to migrate from traditional on-premises environments to colocation, cloud, and edge facilities for better scalability, energy efficiency, and connectivity.

Timeline of key data center architecture milestones

Data Center Growth and Power Demand

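Key figures on estimated global power consumption and capacity demand:

- ~84 GW by 2027: expected capacity demand with steady cloud + AI growth.
- 1,400 TWh by 2030: ~4% of worldwide electricity use.
- 606 TWh by 2030 (US): up from 147 TWh in 2023.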

Data center growth is most accurately measured by the amount of power a facility can deliver. Instead of physical footprint, operators use installed capacity, expressed in megawatts (MW), to indicate how much compute, storage, and cooling the site can support. As high-density servers, AI accelerators, and advanced cooling technologies become standard, MW capacity has become the clearest indicator of industry expansion and the rising energy demand of modern workloads.

By 2027, the forecasted capacity demand is ~84 GW under the base scenario, which assumes steady growth in cloud computing, broader adoption of AI workloads, and continued deployment of high-density servers across hyperscale and colocation facilities. This baseline may shift if AI adoption slows down. In more muted scenarios, demand could fall 9–13 GW below the current estimate.
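The scenario range above can be checked with simple arithmetic. The sketch below is illustrative only: it uses the figures quoted in the text, and the function name `muted_demand_range` is a hypothetical helper, not part of any forecast model.

```python
# Figures quoted in the text: forecast data center capacity demand for 2027, in GW.
BASE_DEMAND_GW = 84.0
MUTED_SHORTFALL_GW = (9.0, 13.0)  # how far demand could fall below the base case


def muted_demand_range(base_gw, shortfall_gw):
    """Return (low, high) demand in GW after subtracting the shortfall range."""
    smallest, largest = shortfall_gw
    return base_gw - largest, base_gw - smallest


low, high = muted_demand_range(BASE_DEMAND_GW, MUTED_SHORTFALL_GW)
print(f"Base scenario:  {BASE_DEMAND_GW:.0f} GW")
print(f"Muted scenario: {low:.0f}-{high:.0f} GW")
```

Under these figures, the muted scenarios land between 71 and 75 GW, so even the low case sits within about 15% of the base estimate.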

AI Set to Drive a Major Shift in Data Center Power Consumption

Today, global data center power use is dominated by cloud services (54%), followed by traditional workloads (32%), and AI workloads (14%).

By 2027, AI's share is projected to rise to 27%, making it one of the fastest-growing contributors to global electricity demand.
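As a rough illustration of what this shift means, the sketch below compares the quoted shares. The last step is an assumption rather than a figure from the sources: it applies the 27% share of power use to the ~84 GW base-scenario capacity estimate to get an order-of-magnitude value for AI demand.

```python
# Shares of global data center power use quoted in the text (percent).
current_mix = {"cloud": 54, "traditional": 32, "ai": 14}
AI_SHARE_2027 = 27  # projected AI share in 2027, percent

# The quoted mix should account for all demand.
assert sum(current_mix.values()) == 100

share_gain_points = AI_SHARE_2027 - current_mix["ai"]  # percentage points
share_ratio = AI_SHARE_2027 / current_mix["ai"]        # relative growth in share

# Assumption: the share of power use also applies to the ~84 GW capacity figure.
BASE_DEMAND_2027_GW = 84.0
ai_demand_gw = BASE_DEMAND_2027_GW * AI_SHARE_2027 / 100

print(f"AI share gain: {share_gain_points} percentage points ({share_ratio:.2f}x)")
print(f"Illustrative AI demand in 2027: {ai_demand_gw:.1f} GW")
```

On these numbers, AI's share nearly doubles, and the illustrative AI demand comes to roughly 23 GW.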

In another estimate, McKinsey projects that by 2030, data centers could consume 1,400 TWh of electricity, or roughly 4% of worldwide power demand. In the US alone, McKinsey’s medium scenario projects data center electricity demand rising from 147 TWh in 2023 to 606 TWh by 2030. The outlook shows a consistent year-over-year increase, with demand nearly doubling by 2027 and more than quadrupling by the end of the decade.
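The US figures imply a steep average growth rate. The following sketch computes the overall multiple and the compound annual growth rate (CAGR) from the two quoted endpoints. It assumes constant year-over-year growth, which is a smoothing of the projection and not the actual yearly path in the McKinsey scenario.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# US data center electricity demand quoted in the text (TWh).
US_2023_TWH = 147.0
US_2030_TWH = 606.0

multiple = US_2030_TWH / US_2023_TWH
rate = cagr(US_2023_TWH, US_2030_TWH, years=2030 - 2023)

print(f"Growth multiple 2023-2030: {multiple:.2f}x")
print(f"Implied average annual growth: {rate:.1%}")
```

The multiple works out to about 4.1x, consistent with "more than quadrupling", and the implied average growth is a little over 22% per year.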

Sources: McKinsey, Goldman Sachs

Main Data Center Components

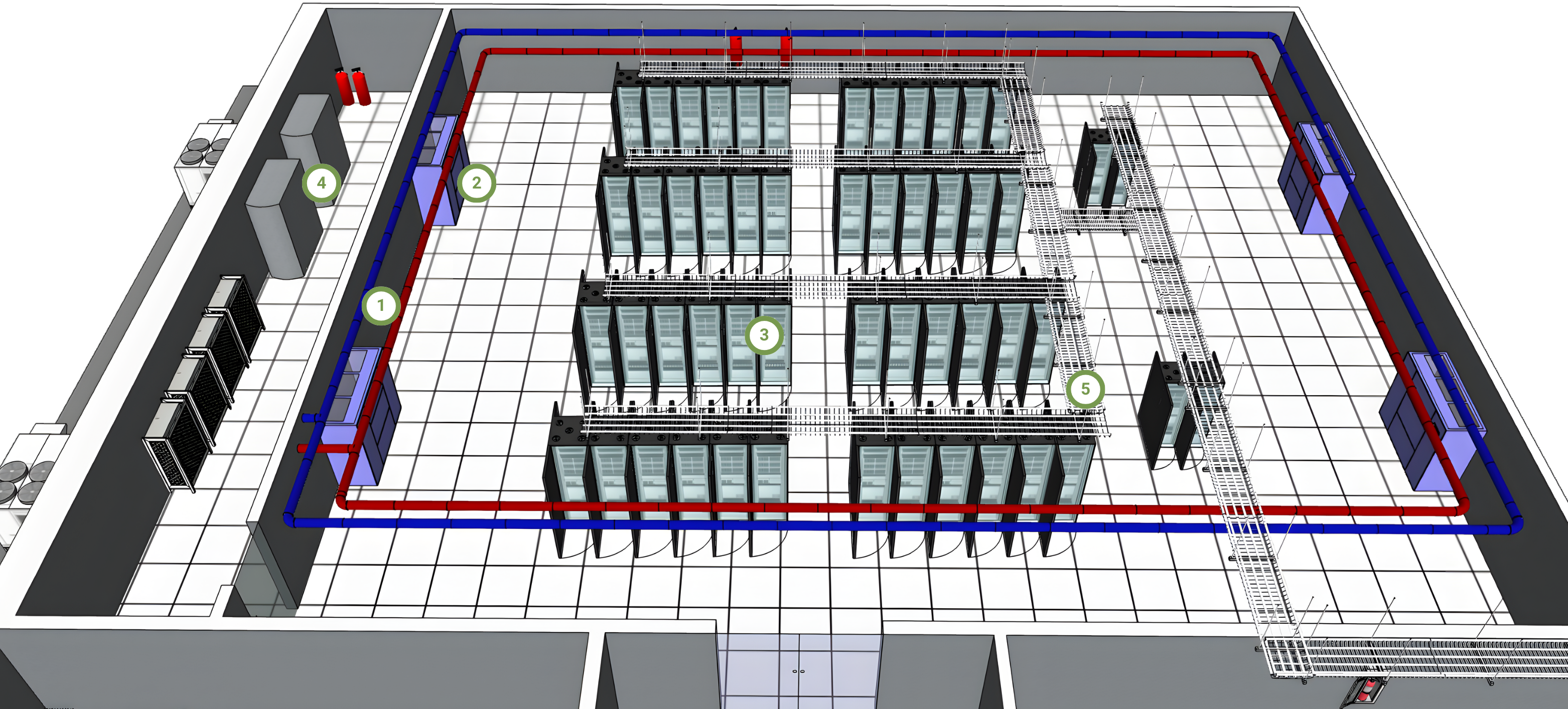

Three Main Data Center Components and Their Operational Implications

Data center components group naturally into three interconnected systems. As workloads grow, especially those driven by cloud and AI, each system faces new performance and scalability requirements:

Cooling: must evolve to remove higher heat loads

Increasing rack power density and GPU-driven thermal output require advanced cooling methods, including liquid, hybrid, or direct-to-chip systems.

Compute: requires denser racks and optimized spaces

AI accelerators and modern compute clusters demand compact rack layouts, improved airflow management, and higher equipment consolidation.

Power: must deliver more energy without downtime

Higher energy demand requires upgraded electrical systems, increased per-rack power delivery, and resilient infrastructure to prevent downtime.
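To give a sense of scale for the cooling requirement, the sketch below estimates the coolant flow a liquid-cooled rack would need, using the heat balance Q = ṁ · c_p · ΔT. Everything here is an illustrative assumption: the rack loads of 40 and 80 kW, water as the coolant, a 10 K temperature rise across the rack, and all heat being carried away by the liquid. Real designs depend on the actual coolant, loop layout, and the share of heat still removed by air.

```python
# Approximate properties of liquid water near room temperature (assumed coolant).
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0
WATER_DENSITY_KG_PER_L = 1.0


def coolant_flow_l_per_min(heat_load_kw, delta_t_k,
                           specific_heat=WATER_SPECIFIC_HEAT_J_PER_KG_K,
                           density=WATER_DENSITY_KG_PER_L):
    """Flow (L/min) needed to absorb heat_load_kw with a delta_t_k coolant rise.

    Rearranges Q = m_dot * c_p * delta_T for the mass flow m_dot (kg/s),
    then converts to litres per minute.
    """
    mass_flow_kg_s = heat_load_kw * 1000.0 / (specific_heat * delta_t_k)
    return mass_flow_kg_s / density * 60.0


for rack_kw in (40, 80):
    flow = coolant_flow_l_per_min(rack_kw, delta_t_k=10.0)
    print(f"{rack_kw} kW rack, 10 K rise: about {flow:.0f} L/min of water")
```

Under these assumptions, the required flow scales linearly with rack power: doubling the heat load doubles the flow, unless the loop can tolerate a larger temperature rise.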

CAPLINQ Solutions for Data Centers

As rack densities increase, heat loads rise sharply, especially with graphics processing unit (GPU) clusters and AI accelerators that can draw 40–80 kW or more per rack. Effective heat transfer becomes critical across cold plates, chillers, heat exchangers, and pump systems. Reliable power delivery and advanced packaging place similar stress on materials throughout the data center. CAPLINQ materials enhance thermal conductivity, electrical insulation, and long-term reliability within these cooling, compute, and power assemblies:

- Thermal interface materials (TIMs): gels (one-part or two-part), pads, and phase-change materials

- Gap fillers for cold plates, chillers, and liquid-cooled manifolds

- Thermally conductive greases

- Heat transfer fluids for direct-to-chip cooling

- Dielectric fluids for immersion cooling

- Encapsulants, underfills, and die-attach materials for 2.5D, 3D semiconductor, and wafer-level packaging

- EMI/RFI shielding materials for server and rack enclosures

- Electrically conductive adhesives and tapes for grounding and interconnects

- Conformal coatings for PCB protection

- Structural adhesives and bonding films for chassis and rack assemblies

- Electrical insulation coatings for busbars, switchgear, transformers, and PDUs

- Potting and encapsulation resins for UPS, PDU, and control electronics

- High-temperature and flame-retardant tapes for cable management and transformer insulation

- Arc-resistant and flame-resistant materials for power modules and battery cabinets

- Thermal runaway mitigation materials for UPS battery systems

Further Reading on Data Center Materials

Enhance cooling efficiency, power stability, and compute reliability across high-density data center environments.

Let our engineers recommend the right materials for your cooling, compute, or power systems.